Previously, I walked folks through building an API to help you store and retrieve information about what you’ve planted in your garden. It was a rather extensive demonstration of how ngrok’s Kubernetes Ingress Controller makes deployments a breeze, but I always felt it was missing a solution to an even more critical problem I have with gardening.

I like the idea of having a green thumb, but not enough to spend all the time researching what to plant and when.

Since then, I’ve shown how to securely access remote AI workloads and deploy AI services on a customer’s data plane using ngrok, which got me thinking: Could I use AI to shortcut my path to a green thumb? Or anyone’s path to having a solid plan for their vegetable garden now that springtime is upon us? Could I deploy an open-source LLM to Azure, make completions available on an API to integrate into an app, and maybe even do a little fine-tuning in the future?

Absolutely.

Aside from walking you through my unicorn-esque startup idea, we will home in on a must-have of any production-grade, public-facing deployment: authentication. This is especially crucial when running large language models (LLMs) anywhere, as they’re a fantastic vector for attack and abuse. In the past, adding authentication to these APIs has been overly complex or expensive, but thanks to ngrok’s recently released developer-defined API Gateway, fixing this glaring flaw in your infrastructure has gotten much more developer-friendly.

The problem with API integrations and existing solutions

Any time you want to integrate an external service into your app using an API, you’re expanding your attack surface, creating more opportunities for attackers to listen in and extract data.

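For example, tools like Ollama, which I’ve covered in the past, create an OpenAI-compatible API server upon deployment, one without authentication. You can build workarounds using nginx reverse proxies or basic auth with Caddy, but those are thin layers: a reverse proxy on its own only decides where traffic goes, and basic auth relies on static username/password pairs sent with every request, which never expire on their own and are hard to rotate across clients. Neither actively requires each caller to prove, with a short-lived and verifiable credential, that they’re allowed to access your API.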

This situation repeats every time you integrate any service or open-source tool with an API into an app you’re developing. Because you can’t easily alter their code to enable your favorite method and service for authentication, you’re completely on the hook for the information available on the API, who can access it, and whatever harmless or nefarious intentions they might have.

There are plenty of viable solutions to this problem already. You can deploy the service/tool to your virtual private cloud (VPC) and only allow requests from within, but that means you’ll need to loop in operations folks to help with your deployment, and it makes local testing tricky. Traditional self-hosted or cloud-based API gateways can also give you the authentication you need, but they’re pricey and require ongoing coordination with operations, networking, and security teams.

We’re going to take a different strategy altogether, using the following “stack”:

- Microsoft Azure for hosting our open-source LLM.
- SkyPilot, an open-source framework for running AI workloads on any GPU-accelerated cloud, to deploy a cluster on Azure and automatically install all the prerequisites.
- Mixtral 8x7B, an open-source LLM that matches or outperforms GPT-3.5 on most benchmarks.
- ngrok to route and authenticate all incoming requests using the new developer-defined API Gateway with JSON Web Tokens (JWTs).

JWTs are a compact open standard (RFC 7519) for transmitting signed data as a JSON object made up of a header, a payload, and a signature. They’re signed, not encrypted, by default: anyone holding a token can read its payload, but any tampering invalidates the signature, and the issuer (here, your Auth0 tenant) typically sets an expiration time on every token. That makes them ideal for protecting API endpoints, especially those involving lots of confidential data and compute power, like an AI-powered service.
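To make the header/payload/signature structure concrete, here is a minimal Python sketch (stdlib only) that splits a compact JWT on its dots and base64url-decodes the first two segments. It deliberately does not verify the signature, which is exactly why you should never put secrets in a payload. The issuer, audience, and signature in the demo token are made-up placeholders, not values from a real Auth0 tenant.

```python
import base64
import json


def b64url_encode(data: bytes) -> str:
    """Encode bytes as unpadded base64url, the way JWT segments are encoded."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring the '=' padding JWTs strip off."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def decode_unverified(token: str) -> tuple[dict, dict]:
    """Return (header, payload) of a compact JWT WITHOUT checking its signature."""
    header_b64, payload_b64, _signature_b64 = token.split(".")
    header = json.loads(b64url_decode(header_b64))
    payload = json.loads(b64url_decode(payload_b64))
    return header, payload


if __name__ == "__main__":
    # Hypothetical claims; a real Auth0 token carries your tenant URL and ngrok domain.
    header = {"alg": "RS256", "typ": "JWT"}
    claims = {"iss": "https://example-tenant.us.auth0.com/", "aud": "example.ngrok.app"}
    token = ".".join([
        b64url_encode(json.dumps(header).encode()),
        b64url_encode(json.dumps(claims).encode()),
        b64url_encode(b"placeholder-signature"),  # not a real RS256 signature
    ])
    print(decode_unverified(token))
```

Anyone can run this against a token they intercept, so the protection comes entirely from the signature check and expiry, not from secrecy of the contents.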

You’ll learn a repeatable process, built on ngrok’s new API Gateway, that you can apply to any API you need to quickly and securely integrate into your own unicorn-esque idea.

Deploying an open-source LLM on Azure with SkyPilot

SkyPilot is an open-source framework for deploying LLMs and AI workloads on multiple clouds with simple scalability and access to GPU compute. Once you install SkyPilot on your local workstation and set up your Azure credentials, you can create an azure_serve.yaml file to tell SkyPilot how to provision a cluster. We’re using a modified version of the example YAML the project provides, mostly for simplicity.

```yaml
resources:
  cloud: azure
  accelerators: {A100-80GB:2, A100-80GB:4, A100:8, A100-80GB:8}
  ports: 8000

setup: |
  conda activate mixtral
  if [ $? -ne 0 ]; then
    conda create -n mixtral -y python=3.10
    conda activate mixtral
  fi
  pip install transformers==4.38.0
  pip install vllm==0.3.2
  pip list | grep megablocks || pip install megablocks

run: |
  conda activate mixtral
  export PATH=$PATH:/sbin
  python -u -m vllm.entrypoints.openai.api_server \
    --host 0.0.0.0 \
    --model mistralai/Mixtral-8x7B-Instruct-v0.1 \
    --tensor-parallel-size $SKYPILOT_NUM_GPUS_PER_NODE | tee ~/openai_api_server.log
```

You can then deploy the LLM cluster:

```
$ sky launch -c mixtral ./azure_serve.yaml
Task from YAML spec: ./azure_serve.yaml
I 03-20 09:31:10 optimizer.py:691] == Optimizer ==
I 03-20 09:31:10 optimizer.py:702] Target: minimizing cost
I 03-20 09:31:10 optimizer.py:714] Estimated cost: $7.3 / hour
I 03-20 09:31:10 optimizer.py:714]
I 03-20 09:31:10 optimizer.py:837] Considered resources (1 node):
I 03-20 09:31:10 optimizer.py:907] --------------------------------------------------------------------------------------------------------
I 03-20 09:31:10 optimizer.py:907]  CLOUD   INSTANCE                     vCPUs   Mem(GB)   ACCELERATORS   REGION/ZONE   COST ($)   CHOSEN
I 03-20 09:31:10 optimizer.py:907] --------------------------------------------------------------------------------------------------------
I 03-20 09:31:10 optimizer.py:907]  Azure   Standard_NC48ads_A100_v4     48      440       A100-80GB:2    eastus        7.35       ✔
I 03-20 09:31:10 optimizer.py:907]  Azure   Standard_NC96ads_A100_v4     96      880       A100-80GB:4    eastus        14.69
I 03-20 09:31:10 optimizer.py:907]  Azure   Standard_ND96asr_v4          96      900       A100:8         eastus        27.20
I 03-20 09:31:10 optimizer.py:907]  Azure   Standard_ND96amsr_A100_v4    96      1924      A100-80GB:8    eastus        32.77
I 03-20 09:31:10 optimizer.py:907] --------------------------------------------------------------------------------------------------------
I 03-20 09:31:10 optimizer.py:907]
Launching a new cluster 'mixtral'. Proceed? [Y/n]:
```

As you can see, SkyPilot uses your Azure credentials and the accelerators you specified to show you options that trade raw GPU compute power against cost. Hit Y to let SkyPilot do its thing, which could take 10-15 minutes.
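Why does the cheapest option still need two 80 GB GPUs? A back-of-the-envelope check helps. Mixtral 8x7B has roughly 46.7B parameters in total (only about 12.9B are active per token, but every expert must sit in GPU memory), and at 16-bit precision each parameter takes 2 bytes. The Python sketch below, a simplified model rather than SkyPilot’s actual optimizer, uses the candidates and hourly prices from the log above, assumes the ND96asr_v4’s A100s are the 40 GB variant, and counts weights only, so real deployments need extra headroom for the KV cache and activations.

```python
import math
from dataclasses import dataclass

# Approximate total parameter count of Mixtral 8x7B; 16-bit weights use 2 bytes each.
MIXTRAL_PARAMS = 46.7e9
BYTES_PER_PARAM = 2


@dataclass
class Candidate:
    instance: str
    gpus: int
    gpu_mem_gb: int
    usd_per_hour: float


# Instances and hourly prices copied from the `sky launch` optimizer output.
CANDIDATES = [
    Candidate("Standard_NC48ads_A100_v4", 2, 80, 7.35),
    Candidate("Standard_NC96ads_A100_v4", 4, 80, 14.69),
    Candidate("Standard_ND96asr_v4", 8, 40, 27.20),
    Candidate("Standard_ND96amsr_A100_v4", 8, 80, 32.77),
]


def weights_gb(params: float = MIXTRAL_PARAMS, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Memory needed for the model weights alone, in decimal gigabytes."""
    return params * bytes_per_param / 1e9


def cheapest_fit(candidates: list[Candidate], needed_gb: float) -> Candidate:
    """Cheapest candidate whose combined GPU memory can hold `needed_gb`."""
    fitting = [c for c in candidates if c.gpus * c.gpu_mem_gb >= needed_gb]
    return min(fitting, key=lambda c: c.usd_per_hour)


if __name__ == "__main__":
    need = weights_gb()
    choice = cheapest_fit(CANDIDATES, need)
    print(f"weights: ~{need:.1f} GB, so at least {math.ceil(need / 80)} x 80 GB GPUs")
    print(f"chosen: {choice.instance} at ${choice.usd_per_hour}/h, ~${choice.usd_per_hour * 24:.2f}/day")
```

The weights alone come to about 93.4 GB, more than a single 80 GB card holds, and leaving the cheapest cluster running around the clock costs roughly $176 per day. That figure is worth keeping in mind when you read about strangers burning your GPU time.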

Once you see Uvicorn running on http://0.0.0.0:8000 (Press CTRL+C to quit), you can safely end the session.

Your custom LLM is accessible via an API… but currently operates entirely without authentication.

Depending on whether your cloud provider makes port 8000 accessible to the public internet at large (the ports: 8000 entry in azure_serve.yaml asks SkyPilot to open it), anyone could access your LLM and create completions of their own, potentially compromising your customer data or simply wasting expensive GPU resources.

Even if you can’t access your API at an IP address like 123.45.67.89:8000 directly using curl from your local machine, it’s never a good idea to believe that just because you can’t get something to work, no one else will.
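If you want to check what a plain TCP connection to port 8000 does from wherever you’re sitting, a small stdlib Python probe like this works. Keep the caveat above in mind: False only describes your own vantage point, while True means the server is almost certainly reachable by anyone on that network path.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable networks.
        return False
```

For example, port_is_open("123.45.67.89", 8000), substituting your cluster’s real public IP for the placeholder.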

Time to fix the flaw.

A few half-hearted authentication methods

You might throw an nginx reverse proxy in front of your API, add IP restrictions or access control lists, and call it a day. These solutions are, however, limited:

- IP restrictions and access control lists (ACLs) require specific addresses/identifiers to allow or block, potentially creating more maintenance work or downtime when an IP address changes unexpectedly.
- They require additional infrastructure, creating more maintenance burden.
- They lack features most teams want from their APIs, like rate limiting or JWT authentication to protect your services from abuse, or robust developer documentation to coordinate your team’s efforts on building your SaaS around these new AI insights.
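Stripped to its core, an IP restriction is a membership test like the sketch below, written with Python’s stdlib ipaddress module. The allowlisted networks are hypothetical, taken from the reserved documentation ranges. Note what it checks: where a request comes from, not who sent it. A legitimate client whose address changes is locked out until someone edits the list, and anyone sharing an allowed address (say, behind the same NAT) gets in.

```python
import ipaddress

# Hypothetical allowlist: the addresses your office or app servers had
# when the rule was written (documentation-reserved ranges).
ALLOWED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.7/32"),
]


def is_allowed(client_ip: str) -> bool:
    """True if client_ip falls inside any allowlisted network."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in ALLOWED_NETWORKS)
```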

Your other option is to collaborate with your Ops teams to deploy a complex and expensive traditional API gateway. Your first hurdle will be finding room in your budget for hundreds or thousands of dollars in monthly cloud bills. If you can get past that, you’ll still need weeks or months to coordinate complex networking configurations and learn to maintain even more infrastructure.

Definitely not ideal for AI. If you want to win out against the flood of new contenders and technology, you’d better be ready to move fast and ensure anything you put into production has rock-solid security. With every trend comes a flood of attackers.

Add ngrok to your LLM cluster for secure ingress

ngrok dramatically simplifies securely routing public traffic to any origin service. To get there, you need to run the ngrok agent on your new Mixtral cluster in Azure.

SkyPilot offers two methods of executing additional commands on your LLM cluster to perform additional tasks or maintenance: using sky exec YOUR_CLUSTER … or SSH-ing into the VM itself.

Installing and running ngrok, particularly in a headless mode, will be easier when connected directly via SSH.

The good news is that SkyPilot has already tweaked your SSH config to include the IP address for your new Mixtral cluster, so you can connect with ssh mixtral.

Log in to your ngrok dashboard to find the right ngrok agent installation method for your cloud provider. On Azure, your node runs Ubuntu, which means you can quickly install ngrok via apt, then configure the ngrok agent with your authtoken (ngrok config add-authtoken {YOUR_AUTHTOKEN}).

Head back to your ngrok dashboard once again to create a new domain for secure ingress to the API.

Then start the ngrok agent, connecting {YOUR_NGROK_DOMAIN} to port 8000 on the node’s localhost.

```
ngrok http 8000 --url={YOUR_NGROK_DOMAIN}
```

Test out your first AI completions

Run your first completion, requesting a gardening plan, using curl:

```
curl -L https://{YOUR_NGROK_DOMAIN}/v1/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "mistralai/Mixtral-8x7B-Instruct-v0.1",
    "prompt": "Create a plan for sowing seeds and transplanting seedlings into a vegetable garden of two garden beds, 10 feet in length and 3 feet in width. The planting will occur mid-March, in Tucson, Arizona, which is USDA Zone 9b.",
    "max_tokens": 5000
  }'
```

You’ll get an answer like the following, which has been nicely re-formatted from the JSON output:

To create a plan, the following factors must be taken into consideration:

- The vegetable varieties to be grown
- The number of plants desired
- The optimal spacing for each vegetable variety
- The anticipated growth rate and duration of each vegetable variety
- Whether to sow seeds directly in the garden beds or transplant seedlings from a greenhouse or indoor starting area

Based on these factors, consider the following plan for a March 15th planting in Tucson, Arizona:

Garden Bed 1:

1. Sow 1 row of radishes (approx. 10 seeds) in the front half of the bed, 1" apart and 1/2" deep, with 2" between rows.
2. Sow 2 rows of lettuce (approx. 20 seeds) in the back half of the bed, 4" apart and 1/4" deep, with 6" between rows.

Garden Bed 2:

1. Transplant 4 bell pepper seedlings in the front half of the bed, 18" apart.
2. Transplant 4 tomato seedlings in the back half of the bed, 24" apart.

This plan takes into consideration the optimal spacing and anticipated growth rates for each vegetable variety, and allows for a diversified planting that includes spring crops such as radishes and lettuce, as well as warm-weather crops such as bell peppers and tomatoes.

It's important to note that due to the hot climate and limited growing season in Tucson, Arizona, it's crucial to start seedlings indoors or in a greenhouse, and make sure to protect them from heat and direct sun during the hottest parts of the day. Also, a drip irrigation or soaker hoses can be used to ensure consistent watering and conserve water in the desert climate.

Any lackluster gardening skills and intuition are now salvaged by AI. Green thumbs, here we come.

That’s good news, but even though you’ve added ngrok to securely tunnel traffic from your chosen domain name, you still haven’t solved the fundamental issue around layering authentication on top of your API. Time to really fix that with ngrok.

Using ngrok as an authenticated API gateway with a Traffic Policy

ngrok recently released its developer-defined API Gateway, which gives you all the benefits of ngrok’s Cloud Edge plus traffic routing, rate limiting, request/response manipulation, and, last but not least, JWTs for securing your APIs with authentication. This new style of API Gateway discards the complexity you’d find in traditional API gateways like Kong or AWS API Gateway, so you can innovate without hurdles, using smart guardrails that you or an operations team establish, with simplicity and security at the forefront.

You can activate ngrok’s API Gateway features through a Traffic Policy, which applies inbound and outbound rules to traffic flowing to and from your upstream service: your Mixtral LLM and its OpenAI-compatible API server. You’ll enable the API Gateway’s JWT Validation action using Auth0, but first, you’ll need to create an API in Auth0.

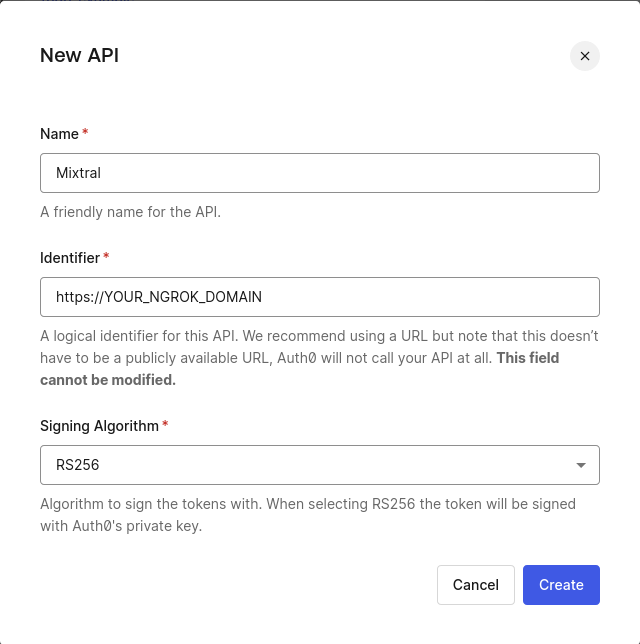

Create an account or log in, and click Applications->APIs, then + Create API.

Put YOUR_NGROK_DOMAIN in for the Identifier as shown below:

To set up the ngrok Traffic Policy, you need your Auth0 Tenant Name, which you can find in the top-left corner of the Auth0 dashboard, next to the Auth0 logo.

Create a new file called mixtral-policy.yaml on your Mixtral cluster with the YAML content below, replacing both instances of {YOUR_AUTH0_TENANT_NAME}, along with {YOUR_NGROK_DOMAIN} in the audience.allow_list.value field. If your tenant’s domain doesn’t end in .us.auth0.com, use the domain Auth0 shows for your tenant instead.

```yaml
on_http_request:
  - actions:
      - type: "jwt-validation"
        config:
          issuer:
            allow_list:
              - value: "https://{YOUR_AUTH0_TENANT_NAME}.us.auth0.com/"
          audience:
            allow_list:
              - value: "{YOUR_NGROK_DOMAIN}"
          http:
            tokens:
              - type: "jwt"
                method: "header"
                name: "Authorization"
                prefix: "Bearer "
          jws:
            allowed_algorithms:
              - "RS256"
            keys:
              sources:
                additional_jkus:
                  - "https://{YOUR_AUTH0_TENANT_NAME}.us.auth0.com/.well-known/jwks.json"
```

Run the ngrok agent again, this time referencing your new mixtral-policy.yaml file:

```
ngrok http 8000 --url={YOUR_NGROK_DOMAIN} --policy-file mixtral-policy.yaml
```

When you curl your API endpoint again, you’ll get an HTML response containing a warning: Your request lacks valid credentials. (ERR_NGROK_3401).

Errors are good at this point!

It means you’ve successfully enabled authentication via OAuth and Auth0, preventing anyone who does not have a valid access token from accessing your open-source LLM in any capacity.
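As a rough mental model of what the jwt-validation action is now enforcing, here are the claim checks that correspond to the issuer, audience, and allowed_algorithms settings in mixtral-policy.yaml, plus the standard expiry check, sketched in stdlib Python. This is an illustration, not ngrok’s implementation: verifying the RS256 signature against the keys published at your tenant’s jwks.json URL needs a crypto library and is deliberately left out, so these checks alone must never be treated as authentication.

```python
from __future__ import annotations

import time


def check_claims(header: dict, claims: dict, *, issuers: list[str], audiences: list[str],
                 algorithms: list[str], now: float | None = None) -> list[str]:
    """Return reasons to reject a token's claims (an empty list means they pass).

    Signature verification is intentionally NOT performed here.
    """
    now = time.time() if now is None else now
    problems = []
    if header.get("alg") not in algorithms:
        problems.append("algorithm not allowed")
    if claims.get("iss") not in issuers:
        problems.append("untrusted issuer")
    # The aud claim may be a single string or a list of strings.
    aud = claims.get("aud")
    aud_values = aud if isinstance(aud, list) else [aud]
    if not any(a in audiences for a in aud_values):
        problems.append("wrong audience")
    exp = claims.get("exp")
    if not isinstance(exp, (int, float)) or exp <= now:
        problems.append("expired or missing exp")
    return problems
```

In the policy, issuers would hold your https://{YOUR_AUTH0_TENANT_NAME}.us.auth0.com/ URL, audiences your ngrok domain, and algorithms ["RS256"].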

Time to get a token of your own.

Click on the Test tab for your Auth0 API.

You’ll see an example curl request pre-filled with your Tenant Name and the client_id/client_secret that Auth0 already created for a new machine-to-machine (M2M) application.

Copy-paste and run that curl command, and you’ll receive an access_token in the response.
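The Test tab’s curl command performs a standard OAuth 2.0 client-credentials exchange against your tenant’s /oauth/token endpoint. If you’d rather fetch tokens from code, here is a stdlib-only Python sketch, assuming a US-region tenant (matching the .us.auth0.com URLs in the policy) and an audience equal to the Identifier you entered, your ngrok domain. The function names are illustrative, and the client secret belongs in an environment variable or secret store, not in source control.

```python
import json
import urllib.request


def build_token_request(tenant: str, client_id: str, client_secret: str,
                        audience: str) -> urllib.request.Request:
    """Build an OAuth 2.0 client-credentials token request for an Auth0 tenant (US region)."""
    body = json.dumps({
        "client_id": client_id,
        "client_secret": client_secret,
        "audience": audience,
        "grant_type": "client_credentials",
    }).encode("utf-8")
    return urllib.request.Request(
        f"https://{tenant}.us.auth0.com/oauth/token",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def fetch_access_token(req: urllib.request.Request, timeout: float = 10.0) -> str:
    """Send the request and return the access_token field from Auth0's JSON reply."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)["access_token"]
```

Tokens expire, so a long-running app would cache the token and request a fresh one shortly before its expiry rather than on every call.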

Run your curl request for a gardening plan again, this time including your access token in an Authorization: Bearer header:

```
curl -L https://{YOUR_NGROK_DOMAIN}/v1/completions \
  --header "Content-Type: application/json" \
  --header "Authorization: Bearer ACCESS_TOKEN" \
  -d '{
    "model": "mistralai/Mixtral-8x7B-Instruct-v0.1",
    "prompt": "Create a plan for sowing seeds and transplanting seedlings into a vegetable garden of two garden beds, 10 feet in length and 3 feet in width. The planting will occur mid-March, in Tucson, Arizona, which is USDA Zone 9b.",
    "max_tokens": 5000
  }'
```

Behind the scenes, ngrok’s Cloud Edge accepts your request and verifies that the JWT is present, carries a valid signature, has not expired, came from a trusted issuer (Auth0), and is intended for your origin service. Because you’ve now supplied a proper access token from Auth0, ngrok forwards your request for a brand-new gardening plan to your API.
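When you’re ready to call the API from an app instead of curl, the same authenticated request can be sketched in stdlib Python. It assumes vLLM’s OpenAI-style completions response, where the generated text lives at choices[0].text; the function names are illustrative. A missing or invalid token makes urlopen raise urllib.error.HTTPError, since ngrok rejects the request before it ever reaches your cluster.

```python
import json
import urllib.request

MODEL = "mistralai/Mixtral-8x7B-Instruct-v0.1"


def build_completion_request(domain: str, access_token: str, prompt: str,
                             max_tokens: int = 5000) -> urllib.request.Request:
    """Build the same POST /v1/completions call as the curl example, with a Bearer token."""
    body = json.dumps({"model": MODEL, "prompt": prompt, "max_tokens": max_tokens}).encode("utf-8")
    return urllib.request.Request(
        f"https://{domain}/v1/completions",
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {access_token}",
        },
        method="POST",
    )


def completion_text(response_json: dict) -> str:
    """Extract the generated text from an OpenAI-style completions response."""
    return response_json["choices"][0]["text"]


def complete(domain: str, access_token: str, prompt: str, timeout: float = 300.0) -> str:
    """Send the request and return the generated text; raises HTTPError on 4xx/5xx."""
    req = build_completion_request(domain, access_token, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return completion_text(json.load(resp))
```

The generous timeout reflects that a 5,000-token completion can take a while to generate.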

What’s next?

The great thing about creating a secure API so quickly is that you still have plenty of time to explore all the exciting opportunities ahead:

- Integrate your newly authenticated API and the gardening wisdom of your open-source LLM directly into your app. You can find example code in many languages from Auth0, auto-populated with your Tenant Name, client_id, and client_secret, in the Test tab from the last step.
- Extend your ngrok API Gateway with features like rate limiting.
- Learn more about ngrok’s new API Gateway, Traffic Policy engine, and JWT validation features through our existing resources and documentation.
- Copy-paste your access_token into JWT.io to learn more about how authorization details are encoded into JWTs.
- Experiment with fine-tuning your open-source LLM using examples from SkyPilot. Unlike other open-source tools we’ve previously covered, like Ollama, SkyPilot lets you upload training data to your self-hosted LLM and run tools like torchrun. You might even upload a new corpus of gardening data to make your plans even more sophisticated or accurate for various edge cases!
- Connect with us on Twitter or the ngrok community on Slack to let us know how you’re using the new ngrok API Gateway.
- Give me a seat on the board of your next AI-based garden planning startup as a token of appreciation.
- Apply this essential authorization layer to every service, open-source tool, or API you integrate into your production-grade apps. With ngrok and the developer-defined API Gateway, you can implement in a few minutes the security best practices that used to take weeks of coordination with operations/networking teams or hinged on iffy solutions like IP restrictions and ACLs.

Sign up for ngrok today and get started securing and integrating your APIs.