Kubernetes ingress to services in a Consul service mesh

The ngrok Kubernetes Operator is our official open-source controller for adding public and secure traffic to your k8s services. It works on any cloud, local, or on-prem Kubernetes cluster to provide ingress to your services.

Consul is a secure and resilient service mesh that provides service discovery, configuration, and segmentation functionality. Consul Connect provides service-to-service connection authorization and encryption using mutual TLS, automatically enabling TLS for all Connect services. Consul can be used with Kubernetes to provide a service mesh for your Kubernetes cluster.

Consul and ngrok complement each other. Consul provides a robust and secure way for Services within a cluster to communicate, while ngrok seamlessly and securely provides public ingress to those services. This guide walks you through setting up a Consul service mesh on Kubernetes. It then uses the ngrok Kubernetes Operator to provide ingress to your services, illustrating how the two work together.

What you'll need

- A remote or local Kubernetes cluster with Consul installed OR minikube to set up a demo cluster locally.

- An ngrok account.

- kubectl and Helm 3.0.0+ installed on your local workstation.

- A reserved domain, which you can get in the ngrok dashboard or with the ngrok API.
  - You can choose from an ngrok subdomain or bring your own custom branded domain, like https://api.example.com.
  - We'll refer to this domain as <NGROK_DOMAIN>.

Set up a local Consul Service Mesh on Kubernetes

For this guide, we'll need access to a remote or local Kubernetes cluster with Consul installed. If you have an existing cluster with Consul set up, you can skip this step and proceed to the Configure the ngrok Kubernetes Operator section.

If you don't have one, we'll set up a local minikube cluster and install Consul now.

- Create a local cluster with minikube


- Create a file called values.yaml with the following contents:


- Install the Consul chart


Depending on your computer, it can take some time for the pods to become healthy. You can watch their status with kubectl get pods --namespace consul -w.

- Verify Consul is installed and all its pods are healthy


We now have a Kubernetes cluster with a Consul service mesh installed.

These steps are based on Consul's tutorial Consul Service Discovery and Service Mesh on Minikube.
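For reference, here is a minimal sketch of what values.yaml might contain for a single-node demo cluster. It assumes a recent Consul Helm chart. The datacenter name dc1 and the NodePort UI service are illustrative choices, not necessarily the exact values used in Consul's tutorial.

```yaml
# values.yaml: minimal Consul Helm values for a local demo cluster (sketch)
global:
  name: consul          # prefix used for Consul's Kubernetes resources
  datacenter: dc1       # illustrative datacenter name
server:
  replicas: 1           # a single Consul server is enough for a local demo
ui:
  enabled: true
  service:
    type: NodePort      # expose the Consul UI on a node port in minikube
connectInject:
  enabled: true         # run the sidecar injector so annotated pods join the mesh
```

You would pass this file to helm install with the --values flag. The connectInject block is what lets later steps add pods to the mesh with a pod annotation.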

Configure the ngrok Kubernetes Operator

Consul requires a bit of extra configuration to work with ngrok's Operator for Kubernetes ingress. You'll need to use a pod annotation to enable the Consul Connect sidecar injector. This will allow us to use Consul Connect to secure the traffic between the ngrok Kubernetes Operator and our services.

- First, create a Kubernetes Service for the ngrok Kubernetes Operator. Consul relies on this Service to name our services when declaring the source and destination values of Service Intentions. We'll create the Service in the consul namespace.

- Install the ngrok Kubernetes Operator

Next, install the ngrok Kubernetes Operator into our cluster. We want the controller pods to be in the Consul service mesh so they can proxy traffic to our other services. We'll use pod annotations to enable the Consul Connect sidecar injector and allow outbound traffic to use the Consul mesh. Consul documents how to set these two annotations in its Configure Ingress Controllers for Consul on Kubernetes doc.


Check out our Operator installation doc for details on how to use Helm to install with your ngrok credentials. Once you've done that, you can run the command below to set the appropriate annotations.


- HashiCorp's docs also mention the annotation consul.hashicorp.com/transparent-proxy-exclude-inbound-ports. It doesn't apply to the ngrok Kubernetes Operator, because the Operator creates an outbound connection for ingress rather than exposing ports.

- In Helm's --set syntax, each . character in an annotation name must be escaped with a backslash (\.) so Helm doesn't split the name into nested keys. The --set-string flag ensures the value true is passed as the string "true" rather than a boolean, since Kubernetes annotation values must be strings.

- In a production environment, or anywhere you wish to use Infrastructure as Code and source control your Helm configurations, you can set up your credentials following this guide.
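If you keep your Helm configuration in source control, the sidecar annotation can live in a values file instead of on the command line. This sketch assumes the ngrok Kubernetes Operator chart accepts the same podAnnotations value that the --set-string command sets. The filename is hypothetical. Only the connect-inject annotation is shown, so add the second annotation from Consul's doc to the same map.

```yaml
# ngrok-operator-values.yaml (hypothetical filename): Helm values sketch
# Annotations applied to the operator's pods so Consul injects its sidecar.
podAnnotations:
  # Quoted so YAML parses it as the string "true"; annotation values must be strings
  consul.hashicorp.com/connect-inject: "true"
  # Add the second annotation from Consul's doc here as well (not reproduced in this sketch)
```

In a values file, the dotted annotation key needs no backslash escaping, because YAML treats the whole key literally. Only --set syntax splits keys on dots. Pass the file to helm install or helm upgrade with --values, alongside your usual credentials setup.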

Install a Sample Application

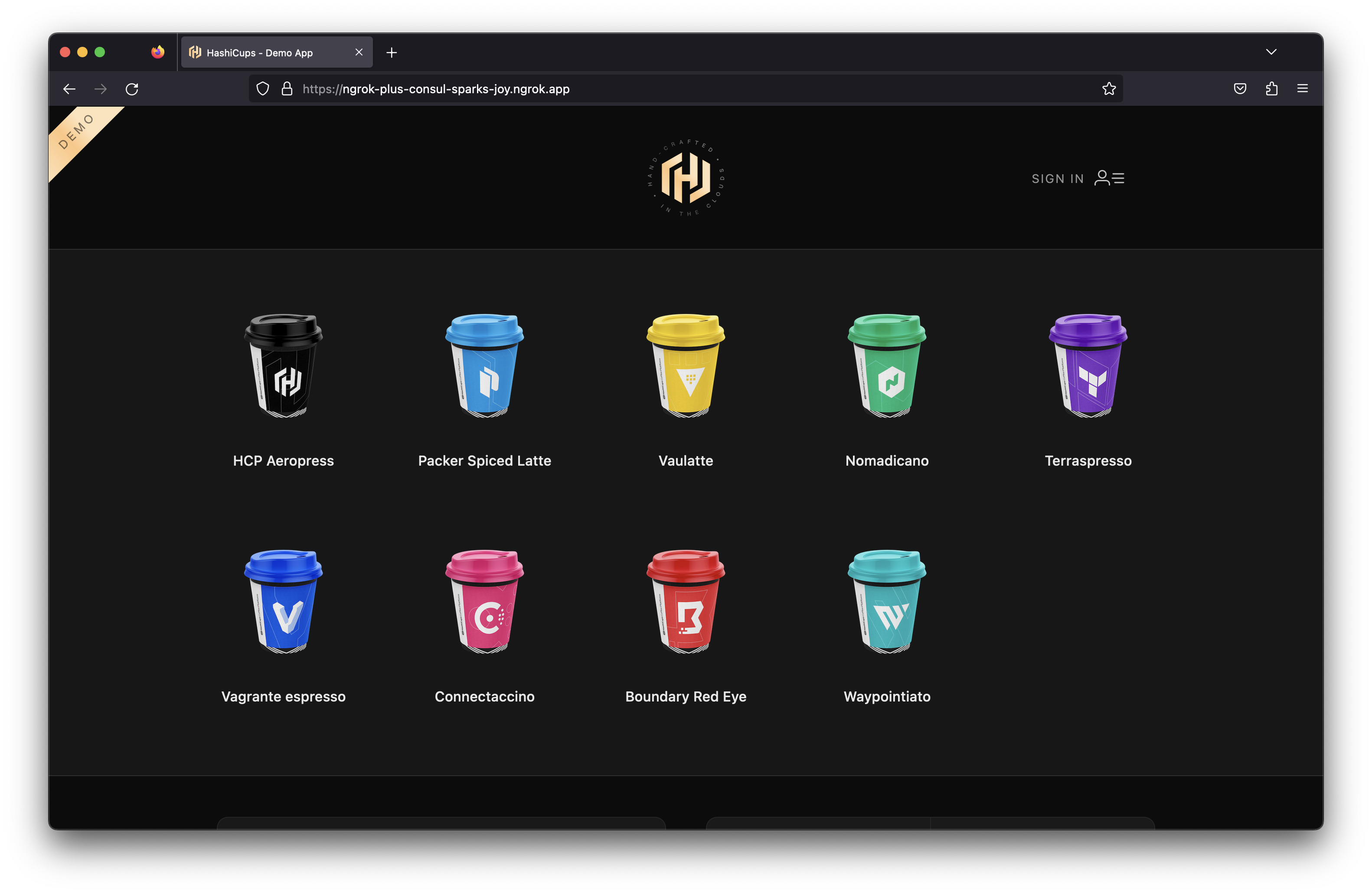

Now let's install a sample application to try out our service mesh and Operator combination. We'll use HashiCups, the demo application HashiCorp uses for demos and guides such as its Getting Started with Consul Service Mesh for Kubernetes guide. HashiCups is a simple e-commerce application that allows users to order coffee cups.

The application has frontend and public API services that are backed by a private API and a database. These communicate with each other through the Consul service mesh. The app ships with nginx as a proxy for the frontend and public API services. We'll replace nginx with ngrok to provide public access and other features.

We'll install the sample application in the consul namespace. The ngrok Kubernetes Operator can send traffic to services across different namespaces, but Consul Service Intentions across namespaces require Consul Enterprise. For now, we'll keep everything in the same namespace.

- Clone the HashiCorp Learning Consul repo. This has multiple great example applications for learning about Consul and Kubernetes. We'll use the HashiCups application for this guide.


- Install the HashiCups sample app in the consul namespace. This app consists of multiple Services and Deployments that make up a tiered application. We'll install all of them from this folder and then modify things from there.


- Remove the existing Service Intentions for the public-api and frontend services and add our own.

Consul has the concept of Service Intentions. In short, they are a programmatic way to configure the Consul Service mesh to allow or deny traffic between services.

HashiCups comes with nginx, along with intentions that allow nginx to reach the frontend and public-api services. We'll remove these and add our own intentions to allow traffic from the ngrok Kubernetes Operator to the frontend and public-api services.


- Create Service Intentions from the ngrok Kubernetes Operator to the HashiCups frontend and the public-api service

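Consul represents intentions as ServiceIntentions custom resources. Each resource names a single destination service. The following is a minimal sketch that allows the operator to reach both HashiCups services. It assumes the Kubernetes Service you created for the operator is named ngrok-operator (hypothetical; substitute the name you chose). The metadata names are illustrative.

```yaml
# Allow the ngrok Kubernetes Operator to call the HashiCups frontend.
apiVersion: consul.hashicorp.com/v1alpha1
kind: ServiceIntentions
metadata:
  name: ngrok-to-frontend        # illustrative resource name
  namespace: consul
spec:
  destination:
    name: frontend               # Consul service name of the HashiCups frontend
  sources:
    - name: ngrok-operator       # hypothetical: must match the operator's Service name
      action: allow
---
# Allow the ngrok Kubernetes Operator to call the public-api service.
apiVersion: consul.hashicorp.com/v1alpha1
kind: ServiceIntentions
metadata:
  name: ngrok-to-public-api      # illustrative resource name
  namespace: consul
spec:
  destination:
    name: public-api             # Consul service name of the HashiCups public API
  sources:
    - name: ngrok-operator       # hypothetical: must match the operator's Service name
      action: allow
```

Apply it with kubectl apply -f in the consul namespace. Because the source name comes from the operator's Kubernetes Service, renaming that Service means updating both sources entries.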

Configure Public Ingress for the sample application

Now that the ngrok Kubernetes Operator can communicate with the frontend service and public-api service through the Consul Service Mesh via Service Intentions, we can create an ingress to route traffic to the app. We'll create ingress objects to route traffic to the frontend service and the public-api service.


- Uses the ngrok ingress class

- The host is the static ngrok domain you reserved, <NGROK_DOMAIN>

- There is a route for / that routes to the frontend service on port 3000

- There is a route for /api that routes to the public-api service on port 8080
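An Ingress matching that description might look like the following sketch. The Ingress name is hypothetical. Replace the host with your reserved <NGROK_DOMAIN> as a bare hostname, without the https:// scheme.

```yaml
# HashiCups Ingress served by the ngrok Kubernetes Operator (sketch)
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hashicups-ingress          # hypothetical name
  namespace: consul                # same namespace as the HashiCups services
spec:
  ingressClassName: ngrok          # handled by the ngrok Kubernetes Operator
  rules:
    - host: <NGROK_DOMAIN>         # replace with your reserved domain, e.g. api.example.com
      http:
        paths:
          - path: /                # everything else goes to the frontend
            pathType: Prefix
            backend:
              service:
                name: frontend
                port:
                  number: 3000
          - path: /api             # API calls go to the public API
            pathType: Prefix
            backend:
              service:
                name: public-api
                port:
                  number: 8080
```

Under standard Ingress semantics, the longer /api prefix takes precedence over /. API requests therefore reach public-api even though / also matches them.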

Open your <NGROK_DOMAIN> domain in your browser and see the HashiCups application!

Add OAuth Protection to the App

Let's take your ingress a step further: suppose you want to add edge security, in the form of Google OAuth, to the endpoint where your HashiCups application is humming along.

With our Traffic Policy system and the oauth action, ngrok manages OAuth protection entirely at the ngrok cloud service. You don't need to add any additional services to your cluster, or alter routes, to ensure ngrok's edge authenticates and authorizes all requests before allowing ingress and access to your endpoint.

To enable the oauth action, you'll create a new NgrokTrafficPolicy custom resource and apply it to your entire Ingress with an annotation. You can also apply the policy to just a specific backend or as the default backend for an Ingress; see our doc on using the Operator with Ingresses.
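Here is a sketch of such a policy. It assumes the NgrokTrafficPolicy API version ngrok.k8s.ngrok.com/v1alpha1 and the k8s.ngrok.com/traffic-policy Ingress annotation, so check the Operator docs for your installed version. The policy name oauth is illustrative.

```yaml
# Traffic Policy requiring Google OAuth on every request (sketch)
apiVersion: ngrok.k8s.ngrok.com/v1alpha1
kind: NgrokTrafficPolicy
metadata:
  name: oauth                    # illustrative; referenced by the Ingress annotation
  namespace: consul              # same namespace as the Ingress
spec:
  policy:
    on_http_request:
      - actions:
          - type: oauth
            config:
              provider: google   # use ngrok's managed Google OAuth integration
# On the existing Ingress, reference the policy by name:
#   metadata:
#     annotations:
#       k8s.ngrok.com/traffic-policy: oauth
```

Because the annotation sits on the Ingress rather than on a single backend, the policy covers both the / and /api routes.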

- Edit your existing ingress configuration: add an annotations field to your Ingress that references a new NgrokTrafficPolicy CR.

- Re-apply your configuration.

- When you open your demo app again, you'll be asked to log in via Google. That's a start, but what if you want to authenticate only yourself or your colleagues?

- You can use expressions and CEL interpolation to filter out and reject OAuth logins from email addresses outside example.com. Update the NgrokTrafficPolicy portion of your manifest after changing example.com to your domain.

- Check out your deployed HashiCups app once again. If you log in with an email that doesn't match your domain, ngrok rejects your request. Authentication... done!
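A domain-restricted policy along those lines might look like this sketch. It assumes the oauth action exposes the logged-in user's email as actions.ngrok.oauth.identity.email, and that the custom-response action accepts status_code and body fields. Verify both against the Traffic Policy docs before relying on it. Replace example.com with your own domain.

```yaml
# Traffic Policy: Google OAuth, then reject emails outside one domain (sketch)
apiVersion: ngrok.k8s.ngrok.com/v1alpha1
kind: NgrokTrafficPolicy
metadata:
  name: oauth                    # illustrative; must match the Ingress annotation
  namespace: consul
spec:
  policy:
    on_http_request:
      # Rule 1: require a Google login for every request
      - actions:
          - type: oauth
            config:
              provider: google
      # Rule 2: runs after OAuth; matches users whose email is outside example.com
      - expressions:
          - "!actions.ngrok.oauth.identity.email.endsWith('@example.com')"
        actions:
          - type: custom-response
            config:
              status_code: 403
              # CEL interpolation inserts the rejected user's email into the response
              body: "Access denied for ${actions.ngrok.oauth.identity.email}"
```

Rules in on_http_request run in order, so the email check only sees requests that have already completed the Google login.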

Now only you can order from HashiCups from anywhere!